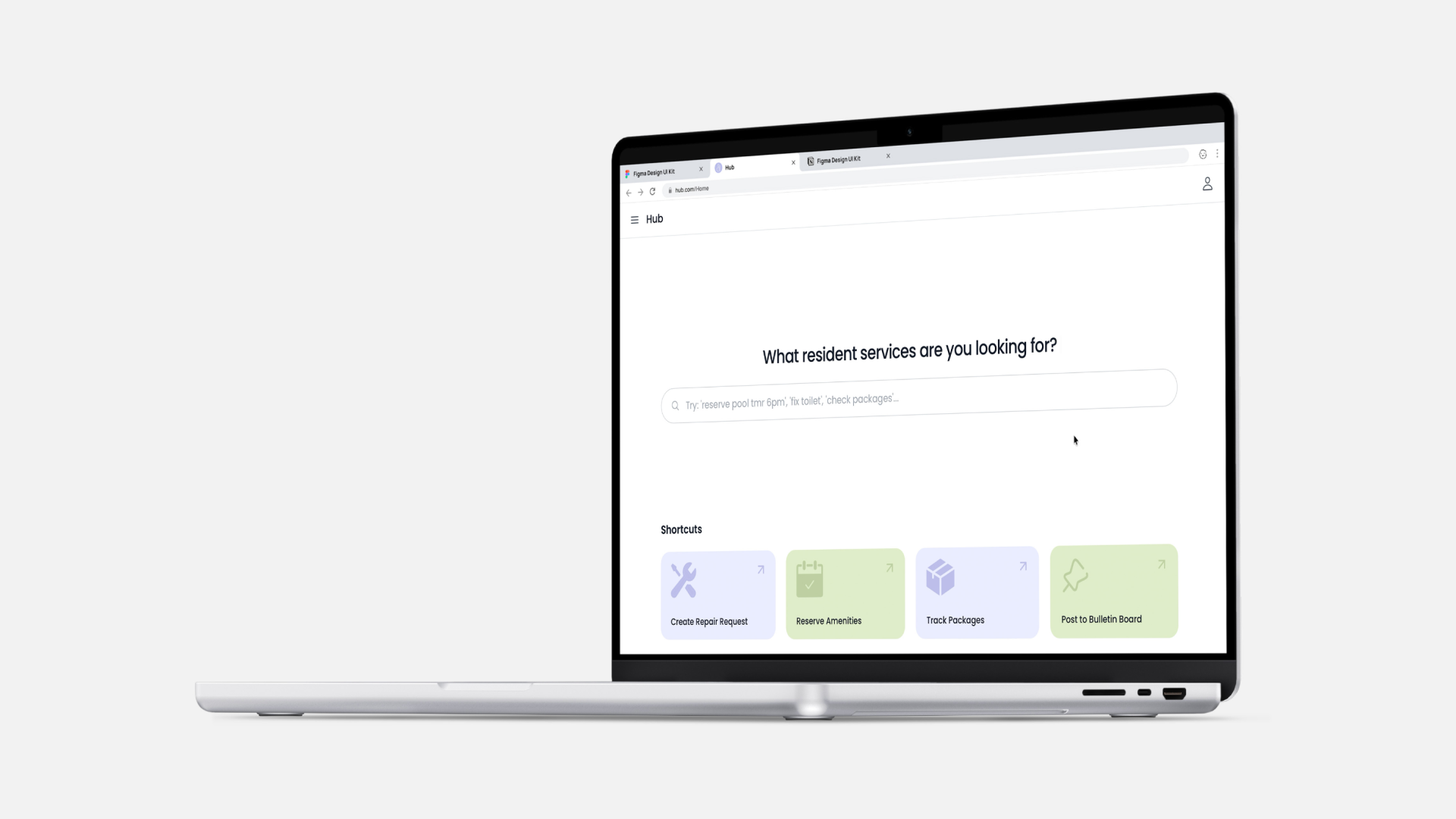

Hub is a resident services platform inspired by BuildingLink. It unifies essential services, such as submitting repair requests and reserving amenities, into one simple, cohesive experience for residents. The project was designed in Figma and delivered as a functional website: a high-fidelity, working implementation that demonstrates its core features in real use. I owned the project from concept to production, leading all design and front-end development.
